Approaches to Achieving Near Real-time Information Assurance

Dr. Bruce C. Gabrielson, NCE

Booz Allen & Hamilton

900 Elkridge Landing Road, Suite 300

Linthicum, MD 21090

Abstract

This paper describes an approach that uses intelligent agents to enhance information assurance (IA) in large networks. The security of large networks is difficult to manage in the face of non-standard configurations, changing technologies, limited resources, and ever-increasing threats. Practical realities now dictate quick, efficient solutions just to keep the network functioning. Various organizations are currently evaluating and developing tools and techniques to address this challenge. The current emphasis on reactive security is giving way to an urgent need for a combination of proactive protection, immediate detection, and automated corrective action. By using an agency (a group of intelligent agents) to address near real-time configuration changes, remote network testing, and remote automated configuration, a full near real-time protect, detect, react and restore capability is possible.

Introduction

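By using an agency of software agents that can securely communicate with network-based security tools, host and network sensors, frameworks, and other agents, it is now possible to achieve a realistic, comprehensive protect, detect, react and restore capability. This paper describes a near real-time approach to security improvement, based on intelligent agents, that is currently being applied to enterprise-wide computing. Specifically, the paper addresses the need for such a capability, the problems with current IA approaches, what intelligent agents are, how an agency can be developed to protect, detect, react and restore, and the IA agents of today and tomorrow.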

The Need

The need for better security and configuration management drives the demand for timely, more responsive, and interoperable security-oriented tools and techniques. Because the network can be attacked in many ways, each causing a different degree of damage, no single tool or technique has been developed that can cover all possible threats. Security tools and techniques have progressed naturally from keyboard inputs, to scripts, to automated scripts, to host-based tools, to proactive network test and detection tools, to centralized frameworks for multiple tools, and finally to the emerging concept of IA-oriented intelligent agents. The ultimate goal of industry and Government IA programs, at either the local network level or the enterprise level, has been to implement a visible, comprehensive, and layered approach in which the host is internally configured for maximum protection, the network is protected from external and internal threats, attacks (should they occur) are detected and responded to immediately with as little human intervention as possible, and networks can be restored quickly to a protected operational state.

For several years, both Government and industry have recognized the need for tools that spot, identify, and alert security administrators to active attacks in real time. The current thrust also recognizes the need to respond and restore as quickly as possible. Organizations such as DARPA, DISA, and the Joint Program Office, along with corporations such as Intrusion.com, SAIC, BTG, HCL Comnet, Axent Technologies, and ISS, among others, have invested considerable research in developing solutions that address the entire spectrum of IA needs and interests. Although new threats are constantly emerging, the ongoing research and development efforts of these and other organizations are now beginning to achieve some degree of success.

IA Problems

In the real world there are both practical and technical problems with maintaining strong IA. On the practical side, users need IA tools that are easy to understand and do not consume much processing capability. On the technical side, technology advances result in equipment and configuration changes that are increasingly difficult to manage in real time. Because customer needs are a moving target, no standard configuration exists that can accommodate all emerging applications. At the working level, keeping the network operating has often become more important than keeping it secure.

Available intrusion detection and test tools that support IA are mostly reactive in nature. They focus primarily on monitoring the what, where, how, and who of a security-related trigger for attacks or disruptions. Some network sensors apply predefined rule sets or attack "signatures" to captured frames to identify hostile traffic, while many host- and network-based tools examine audit files to detect problems. Other, proactive test and monitoring tools either directly simulate an attack on known network vulnerabilities or continuously monitor a particular network for configuration changes. In nearly all cases, these detection and monitoring tools require intervention by trained personnel, are not comprehensive in their coverage, take time to run, and can generate large data files.

Two of the biggest problem areas with current IA approaches are human intervention and data overload. As the table below suggests, getting the needed knowledge to the right individual, so that the required protection or reaction activities are properly carried out when attacks or even initial vulnerability triggers are first detected, is an issue, particularly if no one is available. Breaking this problem down further suggests that a two-tier approach is needed. First, handle as many activities as possible using "smart" automated approaches that do not require human intervention. Second, develop adaptive means of detecting triggers so that, when indicators from local processing call for more in-depth analysis, that analysis can be quickly accomplished using available resources and capabilities. In other words, use a tool that automates as much of the detection and response work as possible when time is critical, then use other tools with more robust analysis capabilities when the need arises and time is available.
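A minimal sketch of the two-tier idea follows, assuming a hypothetical table that maps known triggers to automated responses. Known, lower-severity triggers are handled immediately (tier one); anything unknown or at or above an assumed severity threshold is queued for a person to analyze (tier two). The trigger names, the 1 to 5 severity scale, and the threshold are illustrative only and are not drawn from any specific tool.

```python
from dataclasses import dataclass, field


@dataclass
class Trigger:
    name: str       # hypothetical trigger identifier, e.g. "port_opened"
    host: str
    severity: int   # illustrative scale: 1 (low) to 5 (critical)


# Tier 1: hypothetical rules mapping known triggers to automated responses.
AUTOMATED_RESPONSES = {
    "port_opened": "close_port",
    "service_restarted": "verify_service_config",
}


@dataclass
class Triage:
    escalation_threshold: int = 4  # assumption: severities >= 4 always go to a person
    actions: list = field(default_factory=list)
    analyst_queue: list = field(default_factory=list)

    def handle(self, trigger: Trigger) -> str:
        response = AUTOMATED_RESPONSES.get(trigger.name)
        if response is not None and trigger.severity < self.escalation_threshold:
            # Tier 1: respond immediately, no human in the loop.
            self.actions.append((response, trigger.host))
            return "automated"
        # Tier 2: unknown or severe triggers are held for in-depth analysis.
        self.analyst_queue.append(trigger)
        return "escalated"


triage = Triage()
print(triage.handle(Trigger("port_opened", "hostA", 2)))    # automated
print(triage.handle(Trigger("port_opened", "hostB", 5)))    # escalated
print(triage.handle(Trigger("unknown_login", "hostC", 1)))  # escalated
```

The escalation threshold is the knob that trades automation against human oversight: lowering it sends more known triggers to an analyst.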

Generating large amounts of raw data, and then knowing how to interpret what is collected, is the key to in-depth analysis. Local processing by a tool with significant IA analysis capabilities can handle the detailed work. Security tools also need the ability to signal detection triggers to other receptors or frameworks for which this information is critical.

The extraction of information from large raw data sources is also useful. Data mining is an emerging means of finding intelligence when too much information exists, particularly when broad-based attacks must be detected at the enterprise level. There is also a need to perform more detailed analysis when initial conditions warrant further action. When a complex decision must be made by a person, data mining allows automated acquisition, generation, and exploitation of knowledge from large volumes of heterogeneous information.

The ultimate goal in maintaining a real-world IA posture would be to turn loose an automated (but controlled and directed) tool that finds and reports the relevant information or patterns as close to real time as possible. Other "smart" tools could then be used to gain additional knowledge about the environment or about some specific security-related condition, or even to correct certain conditions immediately should they be detected. The ability to data mine audit files and transmit the analyzed information would also eventually become a significant tool in the security framework.

What Are Intelligent Agents

An intelligent agent is a program that can perform a task based on intelligence learned through active or passive means, or on rules it has been given. Agents can evaluate choices independently, without human interaction. Many agents developed in recent years act as simple detection sensors supporting larger centralized applications. The emerging class of newer agents can do more than simply detect and alert: they can act.

When several agents are put together, they form an agency capable of a combined range of actions. The agency picks the right agent to perform a specific task, using the network itself to do the processing. In other words, the agent acts on the user's behalf to select and complete a required task through rule-based criteria, while using and interacting with other programs and data.
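The rule-based selection step can be sketched as a table that maps task types to agents. This is a minimal illustration under stated assumptions: the task types ("discover", "audit") and the agent functions are hypothetical, and the agents are in-process callables rather than programs communicating across the network.

```python
from typing import Callable, Dict


# Hypothetical single-task agents: each is just a callable acting on a target.
def scan_ports(target: str) -> str:
    return f"port scan of {target}"


def check_config(target: str) -> str:
    return f"configuration check of {target}"


class Agency:
    """Picks the agent for a task using simple rule-based criteria."""

    def __init__(self) -> None:
        self.rules: Dict[str, Callable[[str], str]] = {}

    def register(self, task_type: str, agent: Callable[[str], str]) -> None:
        self.rules[task_type] = agent

    def perform(self, task_type: str, target: str) -> str:
        agent = self.rules.get(task_type)
        if agent is None:
            raise LookupError(f"no agent registered for task '{task_type}'")
        return agent(target)


agency = Agency()
agency.register("discover", scan_ports)
agency.register("audit", check_config)
print(agency.perform("audit", "10.0.0.5"))  # configuration check of 10.0.0.5
```

Raising an error for an unregistered task keeps the agency from silently dropping work it cannot do; a real agency might instead escalate such tasks.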

Fast agents are those that have a single task. To be effective in a large, ever-changing IA environment, the primary change discovery agents need to be adaptive, fast, and focused. These agents should collect and interpret (analyze and process) the initial conditions regardless of how those conditions evolved, discover changes, and report specific findings to other supporting agents and tools. They should also retain raw and processed data in case other agents or observers need it. Supporting agents and tools can then be a mix of detectors, reactors, investigators, and controllers. Control agents can manage agent interactions for many problems, with or without human intervention. When major problems require direct intervention, observers can then direct more comprehensive investigations or responses in a focused manner through the use of frameworks.
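One way to picture a fast, single-task change discovery agent is the observer pattern: the agent keeps both its raw observations and its processed findings, and pushes each finding to whichever supporting agents have subscribed. The sketch below assumes a hypothetical observation type (a set of running service names) and a hypothetical finding format; it is an illustration, not a description of any fielded agent.

```python
from typing import Callable, List, Set


class ChangeDiscoveryAgent:
    """Single-task agent: detect changes between snapshots and report them."""

    def __init__(self) -> None:
        self.raw: List[Set[str]] = []    # raw observations, kept for other agents
        self.findings: List[dict] = []   # processed results, also kept
        self.subscribers: List[Callable[[dict], None]] = []

    def subscribe(self, callback: Callable[[dict], None]) -> None:
        self.subscribers.append(callback)

    def observe(self, snapshot: Set[str]) -> None:
        # The first snapshot becomes the initial condition, so it reports nothing.
        previous = self.raw[-1] if self.raw else snapshot
        self.raw.append(set(snapshot))
        added, removed = snapshot - previous, previous - snapshot
        if added or removed:
            finding = {"added": sorted(added), "removed": sorted(removed)}
            self.findings.append(finding)
            for notify in self.subscribers:  # report to detectors, reactors, etc.
                notify(finding)


received = []
agent = ChangeDiscoveryAgent()
agent.subscribe(received.append)
agent.observe({"telnet", "ftp"})
agent.observe({"telnet", "ftp", "rsh"})
print(received)  # [{'added': ['rsh'], 'removed': []}]
```

Because the agent only compares snapshots and notifies, it stays small and fast; deciding what to do about a finding is left to the subscribers.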

Agents have been developed to support other complementary and evolving technologies, and they are a natural extension for implementing them. As these other technologies evolve, so too will the capability of the associated agents to deliver them. Some supporting and complementary technologies include:

Agents share a common architecture, such as the one shown in Figure 1. The knowledge base contains the knowledge that has been generated as well as the rules being followed. Libraries contain information the agent has identified. Application objects are the resources available to the agent (test tools, in our case). The adapter serves as the standardized interface to the tools. The views are essentially what the agent is capable of delivering to its user.

Figure 1 - Common Intelligent Agent Architecture
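The components of Figure 1 can be expressed as a simple data structure. This sketch assumes one possible mapping: the knowledge base holds rules and generated facts, the library holds what the agent has identified, application objects are tools reached only through a common adapter interface, and a view renders results for the user. All class and tool names are hypothetical, and the stand-in tool does no real probing.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Callable, Dict, List


class ToolAdapter(ABC):
    """Adapter: the standardized interface through which the agent drives a tool."""

    @abstractmethod
    def run(self, target: str) -> Dict[str, str]: ...


class PingTool(ToolAdapter):
    """Hypothetical stand-in application object; a real tool would probe the network."""

    def run(self, target: str) -> Dict[str, str]:
        return {"target": target, "status": "reachable"}


@dataclass
class IntelligentAgent:
    rules: List[Callable[[Dict[str, str]], bool]]            # knowledge base: rules
    knowledge: List[str] = field(default_factory=list)       # knowledge base: facts
    library: Dict[str, Dict[str, str]] = field(default_factory=dict)  # identified info
    tools: Dict[str, ToolAdapter] = field(default_factory=dict)       # application objects

    def act(self, tool_name: str, target: str) -> None:
        result = self.tools[tool_name].run(target)
        self.library[target] = result
        if all(rule(result) for rule in self.rules):
            self.knowledge.append(f"{target} satisfies all rules")

    def view(self) -> str:
        """View: what the agent delivers to its user."""
        return "\n".join(self.knowledge)


agent = IntelligentAgent(rules=[lambda r: r["status"] == "reachable"],
                         tools={"ping": PingTool()})
agent.act("ping", "hostA")
print(agent.view())  # hostA satisfies all rules
```

Routing every tool through the abstract adapter is what lets new test tools be added without changing the agent itself.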

Developing an Advanced Agency Posture for Protect/Detect/React and Restore

The ultimate objective of a near real-time network security agency would be to have the various individual agents act independently, based on pre-defined or even newly discovered conditions, to react and protect against discovered threats, all with little or no human intervention. What are some of the concepts and techniques that have led to the use of agents in achieving these objectives?

With this focus, discovery and countermeasure agents can be, and have been, developed for all layers of the Defense-in-Depth strategy. Existing tools are currently being turned into agents, and new agents supporting encrypted communication channels have been or are being developed to address:

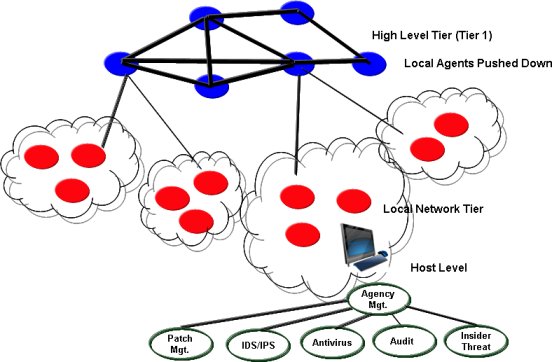

Figure 2 shows where these agents apply within the networked architecture. Note that frameworks for higher levels of protection build upon defense-in-depth at lower levels of protection. Multiple agents can be deployed at all layers within the Global Information Grid (GIG), each in turn interacting with associated agents at its own level or within a hierarchy to perform its job, regardless of where those agents are located. When this distributed agency approach is used, the network itself becomes the processing environment for each agency, and centralized processing gives way to distributed or centralized "visualization" of the IA state of the network or enterprise.

Figure 2 - Agent Locations

Today's Agents

The IA industry has begun to put agents to use. Numerous IA-oriented agents and agent architectures, such as TROVER, the NTSecWiz Agent, Kane Secure Enterprise, ESM, Emerald, Sapphire, and InfoSleuth, are beginning to cover the entire Defense-in-Depth spectrum of detect, protect, react, and respond from host to enterprise. These agents initially operated independently, but they are now being combined into agencies to enhance their overall IA capabilities. Brief descriptions of some of today's products follow.

Designed for DISA by SAIC, Trover is a rule-based configuration management and security agent with a supporting library. It can communicate securely with other agents. It is most effective as a quick audit and compliance validation tool, and it offers significant additional benefits to organizations with large network security audit needs. It can be used as a network security tool that discovers information indicators, including the location and description of all listening TCP ports on a (remote) host. Trover profiles the initial baseline, then sequentially scans and compares to detect potentially vulnerable changes to that baseline. Results provide both the initial baseline snapshot and the history of configuration change snapshots.
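The baseline-then-compare cycle described for Trover can be sketched as follows. This is not Trover's implementation: it assumes the set of listening TCP ports per host has already been collected by some scanner, and it shows only how an initial baseline and a history of change snapshots might be kept. The host names are hypothetical.

```python
from typing import Dict, List, Set

Changes = Dict[str, Dict[str, List[int]]]


class PortBaseline:
    """Keep an initial baseline of listening TCP ports and a history of changes."""

    def __init__(self, baseline: Dict[str, Set[int]]) -> None:
        self.baseline = {host: set(ports) for host, ports in baseline.items()}
        self.history: List[Changes] = []

    def compare(self, scan: Dict[str, Set[int]]) -> Changes:
        changes: Changes = {}
        # Hosts missing from a scan are treated as having no listening ports.
        for host in self.baseline.keys() | scan.keys():
            before = self.baseline.get(host, set())
            after = scan.get(host, set())
            if before != after:
                changes[host] = {"opened": sorted(after - before),
                                 "closed": sorted(before - after)}
        self.history.append(changes)  # one change snapshot per scan
        return changes


tracker = PortBaseline({"hostA": {22, 80}})
print(tracker.compare({"hostA": {22, 80, 23}}))
# {'hostA': {'opened': [23], 'closed': []}}
```

Comparing each scan against the fixed initial baseline, rather than against the previous scan, means a change stays visible in every snapshot until it is corrected.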

Also developed for DISA by SAIC, the agent-configured NT Security Wizard (NTSecWiz) supports expert security analysts in engineering a secure Windows NT platform. It also helps system administrators maintain their secured NT configurations. The NT Security Wizard Agent is a supplement to NTSecWiz that allows automated remote policy enforcement as a result of dynamic responses communicated by agents. NTSecWiz performs in-depth security policy conformance analysis and object analysis.

Intrusion.com's Kane Secure Enterprise (formerly the Computer Misuse Detection System, or CMDS) is a comprehensive set of sensors and agents. It was initially designed to give system administrators and system security officers the capability to perform security-relevant audit reduction and analysis, as well as audit file management, within networked computer enterprises. CMDS uses an expert system, statistical profiling, and historical reporting mechanisms to help identify unauthorized activities and misuse of system information and resources. CMDS also supports the security audit collection and analysis requirements for Trusted Software development. Although CMDS consists of sensors and a central framework, it can also support a secure agent communications channel to enable other agency interactions.
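Statistical profiling of the general kind mentioned for CMDS can be illustrated with a per-user baseline: flag an observation that deviates from that user's historical mean by more than a chosen number of standard deviations. This is a generic z-score sketch, not the CMDS algorithm; the metric (failed logins per day), the user name, and the threshold of 3 are assumptions.

```python
from statistics import mean, pstdev
from typing import Dict, List


class UsageProfile:
    """Per-user statistical profile of one audit metric (e.g. failed logins per day)."""

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold  # assumed: flag beyond 3 standard deviations
        self.history: Dict[str, List[float]] = {}

    def record(self, user: str, value: float) -> None:
        self.history.setdefault(user, []).append(value)

    def is_anomalous(self, user: str, value: float) -> bool:
        samples = self.history.get(user, [])
        if len(samples) < 2:
            return False  # not enough history to judge
        mu, sigma = mean(samples), pstdev(samples)
        if sigma == 0:
            return value != mu  # any change from a constant baseline stands out
        return abs(value - mu) / sigma > self.threshold


profile = UsageProfile()
for count in [1, 0, 2, 1, 1, 0, 2]:
    profile.record("alice", count)
print(profile.is_anomalous("alice", 2))   # False
print(profile.is_anomalous("alice", 15))  # True
```

A per-user profile matters because the same count can be routine for one account and suspicious for another.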

Axent's Enterprise Security Manager (ESM) is a centralized security framework with supporting agents and sensors that gives a security administrator the resources to manage security effectively on enterprise-wide, multi-platform networks from a single location. As a policy enforcer, ESM provides the management and control necessary to bring enterprise networks into compliance with preprogrammed corporate information security policies. Using client-server technology, managers "instruct" local host-based ESM agents on which security checks to perform. The agents work with the operating system on which they are installed to ensure maximum security. No modifications are made to the OS kernel or to any utilities that may be present.
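The manager/agent split described for ESM can be sketched as a manager that sends each host agent the list of checks a policy requires, and an agent that runs only those checks against its local settings (reading them, never modifying them) and reports the results. The check names, settings, and data shapes are hypothetical; ESM's actual protocol is not described in this paper.

```python
from typing import Callable, Dict, List

Settings = Dict[str, object]

# Hypothetical check library available to every host agent.
CHECKS: Dict[str, Callable[[Settings], bool]] = {
    "min_password_length": lambda s: int(s.get("password_length", 0)) >= 8,
    "guest_disabled": lambda s: s.get("guest_account") == "disabled",
    "audit_enabled": lambda s: bool(s.get("auditing", False)),
}


class HostAgent:
    def __init__(self, host: str, settings: Settings) -> None:
        self.host, self.settings = host, settings  # settings are only read

    def run_checks(self, check_names: List[str]) -> Dict[str, bool]:
        return {name: CHECKS[name](self.settings) for name in check_names}


class Manager:
    def __init__(self, policy: List[str]) -> None:
        self.policy = policy  # checks the corporate policy requires

    def audit(self, agents: List[HostAgent]) -> Dict[str, List[str]]:
        """Return the failed checks for each host that is out of compliance."""
        report = {}
        for agent in agents:
            results = agent.run_checks(self.policy)
            failed = [name for name, ok in results.items() if not ok]
            if failed:
                report[agent.host] = failed
        return report


hosts = [HostAgent("hostA", {"password_length": 12, "guest_account": "disabled", "auditing": True}),
         HostAgent("hostB", {"password_length": 6, "guest_account": "enabled"})]
print(Manager(["min_password_length", "guest_disabled"]).audit(hosts))
# {'hostB': ['min_password_length', 'guest_disabled']}
```

Keeping the check library on the agents and sending only check names from the manager keeps the instruction traffic small.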

Under development at DARPA, Emerald monitors support intrusion-detection services in the form of integrated network surveillance modules that are independently tunable and dynamically deployable to key observation points throughout large networks. Emerald's analysis scheme targets the external threat agent who attempts to subvert or bypass a domain's network interfaces to gain unauthorized access to domain resources or to prevent the availability of these resources. The monitors can operate independently to target specific analysis objectives (e.g., protection of a specific network service), and they can also interoperate in an analysis hierarchy to correlate their alarms in a way that facilitates the detection of multi-domain or coordinated attacks. Emerald's signature and statistical analyses are not performed as monolithic analyses over an entire domain; rather, they are deployed sparingly throughout a large enterprise to provide more focused protection of specific key network assets. Most important to future applications, Emerald is geared toward new techniques in malicious alarm correlation and in the management of intrusion-detection services in agency configurations where many independent agents provide correlatable indicators.
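Correlating alarms from independent monitors to expose a coordinated attack can be sketched as grouping alarms by apparent source and flagging any source reported by monitors in at least two distinct domains within a sliding time window. This is a deliberately simple stand-in for Emerald's correlation, not its method; the alarm fields, the 300-second window, the two-domain minimum, and the addresses (from documentation ranges) are assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Alarm:
    monitor_domain: str  # domain of the monitor that raised the alarm
    source_ip: str       # apparent origin of the suspicious activity
    timestamp: float     # seconds since a common epoch


def correlate(alarms: List[Alarm], window: float = 300.0,
              min_domains: int = 2) -> Dict[str, Set[str]]:
    """Return sources seen by at least `min_domains` domains within `window` seconds."""
    by_source: Dict[str, List[Alarm]] = defaultdict(list)
    for alarm in alarms:
        by_source[alarm.source_ip].append(alarm)

    coordinated: Dict[str, Set[str]] = {}
    for source, group in by_source.items():
        group.sort(key=lambda a: a.timestamp)
        start = 0
        for end in range(len(group)):
            # Advance the window start until the window spans at most `window` seconds.
            while group[end].timestamp - group[start].timestamp > window:
                start += 1
            domains = {a.monitor_domain for a in group[start:end + 1]}
            if len(domains) >= min_domains:
                coordinated[source] = domains
                break
    return coordinated


alarms = [Alarm("finance", "203.0.113.7", 100.0),
          Alarm("engineering", "203.0.113.7", 250.0),
          Alarm("finance", "198.51.100.2", 100.0),
          Alarm("engineering", "198.51.100.2", 900.0)]
print(sorted(correlate(alarms)))  # ['203.0.113.7']
```

Neither monitor alone sees anything unusual about the first source; only the correlation across domains reveals it.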

Currently being developed by the Joint Program Office, the Sapphire framework and agents allow near real-time detection, reaction, restoration, and in-depth investigation of intrusions. The Sapphire framework currently incorporates some of the agents described above and is designed so that other agents can be easily and immediately incorporated into its existing hierarchical structure. As with Emerald, Sapphire pushes most analysis and processing down to the lowest level of the network and relies only on views for higher-level authorities.
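Pushing analysis to the lowest level and passing only views upward can be sketched as a tree of nodes: leaf agents reduce their own raw events to summary counts, and each parent merges its children's summaries without ever seeing the raw data. The node names, event types, and count-based summary are hypothetical and are not taken from Sapphire.

```python
from collections import Counter
from typing import List, Optional


class AgencyNode:
    """A node in a hierarchical agency; leaves hold raw events, parents hold views."""

    def __init__(self, name: str, children: Optional[List["AgencyNode"]] = None) -> None:
        self.name = name
        self.children = children or []
        self.raw_events: List[str] = []  # only populated at leaf (lowest-level) nodes

    def view(self) -> Counter:
        if not self.children:
            # Lowest level: analyze raw events locally, reducing them to counts by type.
            return Counter(self.raw_events)
        # Higher levels: merge child views; raw data never leaves the leaves.
        total: Counter = Counter()
        for child in self.children:
            total += child.view()
        return total


lan1, lan2 = AgencyNode("lan1"), AgencyNode("lan2")
lan1.raw_events += ["port_scan", "port_scan", "failed_login"]
lan2.raw_events += ["failed_login"]
enterprise = AgencyNode("enterprise", [AgencyNode("site", [lan1, lan2])])
print(sorted(enterprise.view().items()))  # [('failed_login', 2), ('port_scan', 2)]
```

Only the small summaries travel up the tree, which is what keeps the higher levels from being overloaded as the network grows.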

When an agency-based framework is implemented, centralized data processing and raw data storage can be achieved to some extent. At some point, however, overload will be reached and centralized processing will no longer scale. Because raw data can exist in many forms, each requiring a special agent or tool designed to find, acquire, interpret, and extract the related data into a usable, common format, remote information gathering becomes a complex task. There will also continue to be a need to remotely analyze or monitor a particular situation to a greater degree than current IA agents can accommodate. Data mining agents have been developed to perform this function.

InfoSleuth was developed as a multi-use data mining tool that allows complex queries to run against heterogeneous sources, which may be located on disparate operating systems. It consists of an agent-based infrastructure that can be used to deploy agents for information gathering and analysis over diverse and dynamic networks of multimedia information sources. It may be viewed as a set of loosely interoperating, though cooperating, active processes distributed across a network. InfoSleuth is evolving as a means of capturing and analyzing broad-based audit and other intrusion detection information by mining raw data at its local source.
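Mining heterogeneous raw data at its source and returning it in a common format can be sketched with per-source parsers that convert differently formatted audit records into one record type, plus a query applied per source so that only matching records are combined. The two log formats, their field names, and the hosts are invented for illustration, the network transport is omitted, and InfoSleuth's own infrastructure is considerably richer.

```python
import csv
import io
import json
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass
class AuditRecord:  # common format shared by all sources
    host: str
    user: str
    event: str


def parse_json_lines(text: str) -> Iterable[AuditRecord]:
    """Hypothetical source 1: one JSON object per line."""
    for line in text.splitlines():
        if line.strip():
            d = json.loads(line)
            yield AuditRecord(d["host"], d["user"], d["event"])


def parse_csv(text: str) -> Iterable[AuditRecord]:
    """Hypothetical source 2: CSV with different column names."""
    for row in csv.DictReader(io.StringIO(text)):
        yield AuditRecord(row["machine"], row["account"], row["action"])


Source = Tuple[str, Callable[[str], Iterable[AuditRecord]]]


def mine(sources: List[Source],
         predicate: Callable[[AuditRecord], bool]) -> List[AuditRecord]:
    """Apply the query per source so only matching records are combined."""
    results: List[AuditRecord] = []
    for raw_text, parser in sources:
        results.extend(r for r in parser(raw_text) if predicate(r))
    return results


unix_log = '{"host": "h1", "user": "root", "event": "login_failed"}\n'
nt_log = "machine,account,action\nh2,admin,login_failed\nh2,bob,login_ok\n"
hits = mine([(unix_log, parse_json_lines), (nt_log, parse_csv)],
            lambda r: r.event == "login_failed")
print([(r.host, r.user) for r in hits])  # [('h1', 'root'), ('h2', 'admin')]
```

Filtering at each source before combining is the point: in a distributed deployment, only the matching records would need to cross the network.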

Tomorrow's Agents

The key to future agent development will be more "bang" with less human intervention. Regardless of the problem: find what is wrong, get it fixed, and get the resource up and running again as quickly as possible. Current technology gaps include hierarchical visualization, the complexity of the tasks agents can perform, the ability to perform many secure remote tasks, and the ability to mine (and visualize the results of) large raw data sources remotely. Future research should continue to focus on improved Defense-in-Depth coverage: enhancing the visualization, interfacing, and control capabilities of agencies for both automated network configuration and test tools, and enhancing the analysis and reporting capabilities of various detection agents.

Another significant effort should involve enhancing the ability of remote agents to data mine dispersed raw data sources, and to concentrate and pre-analyze collected raw data across the enterprise in order to reduce the amount of information transmitted to higher levels of authority. The intent of this effort would be to develop an independent detect-and-react security agency intended for broad application to hierarchical, large-scale networks. Still another focus of future research could be the development of agents that can both harden and restore systems at the host level when attacked. Such agents could be designed to force the system to fix itself and keep running in the face of significant obstacles.
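A host-level restore agent of the kind proposed here could, in its simplest form, compare the current configuration with a known-good hardened baseline, revert any drift, and log each repair. This is a speculative sketch: the configuration is modeled as a plain dictionary, and the setting names and values are hypothetical.

```python
from typing import Dict, List, Tuple


class RestoreAgent:
    """Revert configuration drift back to a known-good (hardened) baseline."""

    def __init__(self, known_good: Dict[str, str]) -> None:
        self.known_good = dict(known_good)
        self.repairs: List[Tuple[str, str, str]] = []  # (setting, found, restored)

    def restore(self, config: Dict[str, str]) -> Dict[str, str]:
        for setting, good_value in self.known_good.items():
            current = config.get(setting, "<missing>")
            if current != good_value:
                self.repairs.append((setting, current, good_value))
                config[setting] = good_value  # fix in place so the host keeps running
        return config


agent = RestoreAgent({"telnet": "disabled", "firewall": "on"})
live = {"telnet": "enabled", "firewall": "on", "web": "on"}
agent.restore(live)
print(agent.repairs)  # [('telnet', 'enabled', 'disabled')]
```

Settings absent from the baseline (here, `web`) are left alone, so the agent repairs only what it knows to be security-relevant rather than reimaging the host.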